Right? I was too lazy to double-check, but yeah, the original claim seems absurd considering it's missing at least the top 5 most populous countries, representing nearly 4 billion people.

Would anything have prevented an increase in rates? I’d bet if everyone got out of line, the rate increases would have been the same or higher. The only difference would be no one received $100.

I haven’t pumped gas in 3 years and it’s glorious.

Sure, I agree.

Unfortunately, no such solution currently exists or has been widely adopted.

I use an app called Recipe Keeper. It's amazing because I just share the page to the app, it extracts the recipe without any nonsense, and now I have a copy for later if I want to reuse it. I literally never bother scrolling through recipe pages because of how terrible they all are, and I decide in the app whether the recipe is one I want to keep.

It also bypasses paywalls and registration requirements for many sites because the recipe data is still on the page for crawlers even if it’s not rendered for a normal visitor.
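A minimal sketch of the "data is still on the page for crawlers" idea, assuming the site embeds schema.org `Recipe` structured data as JSON-LD inside a `<script type="application/ld+json">` tag (a common convention, not something every site does). This is not how Recipe Keeper works internally; `JsonLdCollector` and `extract_recipe` are hypothetical names, and only the Python standard library is used.

```python
import json
from html.parser import HTMLParser


class JsonLdCollector(HTMLParser):
    """Collects the raw text of every <script type="application/ld+json"> tag."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_jsonld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False

    def handle_data(self, data):
        # Script contents may arrive in several chunks, so append.
        if self._in_jsonld:
            self.blocks[-1] += data


def _walk(node):
    """Yield every dict in a JSON-LD tree, including items nested in @graph lists."""
    if isinstance(node, dict):
        yield node
        for value in node.values():
            yield from _walk(value)
    elif isinstance(node, list):
        for item in node:
            yield from _walk(item)


def extract_recipe(html):
    """Return the first schema.org Recipe object embedded in the page, or None."""
    parser = JsonLdCollector()
    parser.feed(html)
    for block in parser.blocks:
        try:
            data = json.loads(block)
        except json.JSONDecodeError:
            continue  # skip malformed blocks rather than failing the whole page
        for node in _walk(data):
            types = node.get("@type")
            types = types if isinstance(types, list) else [types]
            if "Recipe" in types:
                return node
    return None


if __name__ == "__main__":
    # The visible body is a paywall, but the structured data is still there.
    page = """<html><head>
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "Recipe", "name": "Pancakes",
 "recipeIngredient": ["1 cup flour", "1 egg"]}
</script></head><body><div class="paywall">Subscribe to read</div></body></html>"""
    print(extract_recipe(page)["name"])  # Pancakes
```

Walking the whole JSON tree is a deliberate choice: many sites wrap the recipe in an `@graph` list alongside other page metadata rather than putting it at the top level.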

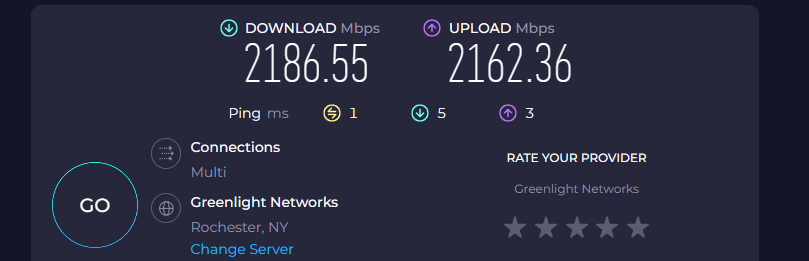

You’re in the wrong Rochester!

Thanks for the clarification; I believe I misunderstood your original comment.

To add to your list, there's also GitHub Discussions, an often underutilized GitHub feature.

You mean pretty much a single GitHub account?

Also, your quick question may have already been asked and answered but be difficult to find on Discord. Or, if it hasn't been asked yet, a future person with the same question now can't discover it easily. So either way you're just wasting other people's time.

I recently went through these exact pains trying to contribute to a project that exclusively ran through Discord and eventually had to give up when it was clear they would never enable issues in their GitHub repos for “reasons.”

It was impossible to discover the history behind anything. Even current information was lost within days, so we kept having to rehash aspects that had already been investigated and decided upon.

It’s not skippable as far as I can tell. It also frequently advertises shows I’ve already watched. Sometimes it advertises the show I’m trying to watch.

I'm pretty sure the "ad counter" is also shown on screen during this.

Here’s what they call it in their docs:

> You'll also see a quick preview only once per day before any show to keep you up-to-date on our original programming.

It’s not an ad, it’s a “preview.” /s

Yes, at least currently. There may be better options as multi-gigabit internet access becomes more commonplace and commodity hardware gets faster.

The other options mentioned in this thread are basically toys in comparison (either obtaining results from existing search engines or operating at a scale of less than a few terabytes).

It’s a really interesting question and I imagine scaling a distributed solution like that with commodity hardware and relatively high latency network connections would be problematic in several ways.

There's a difference of several orders of magnitude between the population of people who would participate in providing the service and the population who would consume it.

Those populations aren’t local to each other. In other words, your search is likely global across such a network, especially given the size of the indexed data.

To put some rough numbers together for perspective, for search nearing Google’s scale:

A single copy of a 100PB index would require 10,000 network participants each contributing 10TB of reliable and fast storage.

100K searches/sec, if evenly distributed and resolvable by a single node, would be at least 10 req/sec/node. Realistically it's much higher than that, depending on how many copies of the index there are, how requests are routed, and how many nodes participate in a single query (probably on the order of hundreds). Of that 10TB of storage per node, a substantial amount would need to be kept in memory to sustain the likely hundreds of req/sec a node might see on average.

The index needs to be updated. Let's suppose the index is 1/10th the size of the crawled data and the oldest data is 30 days old (which is pretty stale for popular sites). That's at least 33PB of data to crawl per day, or roughly 3,000Gbps of minimum sustained data ingestion. Spread across those 10,000 nodes, that's about 0.3Gbps each on average just to ingest fresh data, so realistically each node would want something like a 1Gbps connection.
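The back-of-envelope figures for a Google-scale distributed index can be reproduced with a few lines of arithmetic. The inputs are the rough assumptions stated in this comment (100PB index, 10TB per node, 100K searches/sec, a 10:1 crawl-to-index ratio, 30-day freshness) in decimal units; nothing here is measured. Note that the per-node ingestion line is an average, so real links would need headroom above it.

```python
# Back-of-envelope numbers for a distributed search index.
# All inputs are the comment's rough assumptions, not measurements.
PB = 10**15  # bytes (decimal units)
TB = 10**12

index_size = 100 * PB
per_node_storage = 10 * TB
nodes = index_size // per_node_storage  # nodes needed for ONE copy of the index

searches_per_sec = 100_000
req_per_node = searches_per_sec / nodes  # best case: one node answers each query

crawl_ratio = 10   # index is 1/10th the size of the crawled data
max_age_days = 30  # oldest data allowed in the index
crawled = index_size * crawl_ratio
bytes_per_day = crawled / max_age_days
gbps_total = bytes_per_day * 8 / 86_400 / 10**9  # bits per second, in Gbps
gbps_per_node = gbps_total / nodes               # average, with no headroom

print(f"nodes: {nodes:,}")                                 # nodes: 10,000
print(f"req/sec/node (best case): {req_per_node:.0f}")     # 10
print(f"crawl per day: {bytes_per_day / PB:.1f} PB")       # 33.3 PB
print(f"ingest total: {gbps_total:,.0f} Gbps")             # 3,086 Gbps
print(f"ingest per node (avg): {gbps_per_node:.2f} Gbps")  # 0.31 Gbps
```

Changing a single assumption (say, 7-day freshness instead of 30) scales the ingestion numbers proportionally, which is a quick way to see how sensitive the design is to freshness.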

These are all rough numbers, but this is not something the vast majority of people would have the hardware and connection to support.

You'd also need many copies of this setup around the world for redundancy and lower latency. You'd also want to protect the network against DDoS attacks, abuse, and malicious network participants. And you'd need some form of organizational oversight to support the removal of certain data.

Probably the best way to support such a distributed system in an open manner would be to have universities and other public organizations run the hardware and support the network (at a non-trivial expense).

It’s the same inner voice speaking thoughts instead of words on a page.

Read this sentence one word at a time. As you read it, do you hear the words spoken inside your head?

I disagree. You should have validation at each layer, as it’s easier to handle bad inputs and errors the earlier they are caught.

It’s especially important in this case with email because often one or more of the following comes into play when you’re dealing with an email input:

I'm not suggesting that email validation should attempt to be exhaustive, but a well-thought-out implementation validates all user inputs. Even the underlying API in this example validates the email address you give it before trying to send an email through its own underlying API.

Passing obvious garbage inputs down is just bad practice.

Yes, but no. Pretty much every application that accepts an email address on a form is going to turn around and make an API call to send that email. Guess what that API is going to do when you send it a string for a recipient address without an @ sign? It’s going to refuse it with an error.

Therefore the correct amount of validation is that which satisfies whatever format the underlying API requires.

For example, AWS SES requires addresses in the form `UserName@[SubDomain.]Domain.TopLevelDomain`, along with other caveats. If the application is using SES to send emails, I'm not going to allow an input that doesn't meet those requirements.
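A minimal sketch of a pre-check that rejects obvious garbage before it reaches the sending API, approximating only the `UserName@[SubDomain.]Domain.TopLevelDomain` shape. `looks_sendable` is a hypothetical helper, the regex is deliberately loose, and it is not SES's actual validator; SES documents further caveats this does not try to cover, so the API can still reject some addresses that pass here.

```python
import re

# Hypothetical, deliberately loose approximation of the documented shape
# UserName@[SubDomain.]Domain.TopLevelDomain. NOT SES's real validator.
_LABEL = r"[A-Za-z0-9](?:[A-Za-z0-9-]{0,61}[A-Za-z0-9])?"  # one DNS label
_EMAIL_RE = re.compile(
    r"[A-Za-z0-9.!#$%&'*+/=?^_`{|}~-]+"  # UserName: ASCII local-part chars
    + r"@(?:" + _LABEL + r"\.)+"        # [SubDomain.]Domain.
    + r"[A-Za-z]{2,}"                    # TopLevelDomain
)


def looks_sendable(address: str) -> bool:
    """Return True if the address roughly matches the expected shape."""
    return _EMAIL_RE.fullmatch(address) is not None


print(looks_sendable("user@example.com"))  # True
print(looks_sendable("user.example.com"))  # False: no @ sign
print(looks_sendable("user@localhost"))    # False: no TopLevelDomain
```

The point is not to replicate the downstream API's rules exactly, only to fail fast at the form layer on inputs that API is guaranteed to refuse.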

Why?

You should get 33% more pay, as the full workforce's productivity would be 4/3 of the original in your example.

This difference might be clearer with an example where only half of the workforce is required to match the original productivity. In this case, if the full workforce continues to work, productivity is presumably doubled. That's not a 50% increase. It's 200% of the original, or a 100% increase. So the trade-off should be between 50% fewer working hours and 100% more pay.
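The asymmetry can be made concrete with exact fractions. This sketch assumes, as the comment does, that output scales linearly with the share of the workforce (or hours) in use, and reads the 4/3 figure as meaning three quarters of the workforce matched the original output. The function names are hypothetical.

```python
from fractions import Fraction


def pay_increase_for_full_workforce(fraction_needed: Fraction) -> Fraction:
    """If only `fraction_needed` of the workforce matches the original output,
    keeping everyone working scales output by 1 / fraction_needed (assuming
    linear scaling). Returns the matching pay increase, e.g. 1/3 means +33%."""
    return 1 / fraction_needed - 1


def hours_reduction(fraction_needed: Fraction) -> Fraction:
    """The alternative trade: cut hours so output stays at the original level."""
    return 1 - fraction_needed


for f in (Fraction(3, 4), Fraction(1, 2)):
    cut = float(hours_reduction(f))
    raise_ = float(pay_increase_for_full_workforce(f))
    print(f"{f}: {cut:.0%} fewer hours or {raise_:.0%} more pay")
# 3/4: 25% fewer hours or 33% more pay
# 1/2: 50% fewer hours or 100% more pay
```

The hours cut is `1 - f` while the pay raise is `1/f - 1`, which is why the two percentages diverge more as `f` shrinks.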

Of course, instead you’ll work the same hours for the same pay and some shareholders pocket that 100% difference.

How does it verify the command is valid? Does it run what I enter?

If so, just give it an infinite loop followed by some attempt at a tar command:

```sh
while true; do :; done; tar -xyz
```