What are your thoughts on #privacy and #itsecurity regarding the #LocalLLMs you use? They seem to be an alternative to ChatGPT, MS Copilot etc., which are basically creepy privacy black boxes. How can you be sure that local LLMs A) do not “phone home”, B) do not create a profile on you, and C) restrict their analysis to the scope of your terminal? As far as I can see, #ollama and #lmstudio do not provide privacy statements.

How fast are the response times, and how useful are the answers, of the open source models that you can run on a low-end GPU? I know this will be an “it depends” answer, but maybe you can share more of your experience. I often use the newest Claude Sonnet model, and for my use cases it is a real efficiency boost if used right. Around the middle of last year I briefly tested an open source model from Meta and it just wasn’t it. Or do we have to conclude that we’ll need to wait another year until smaller open source models are more proficient?

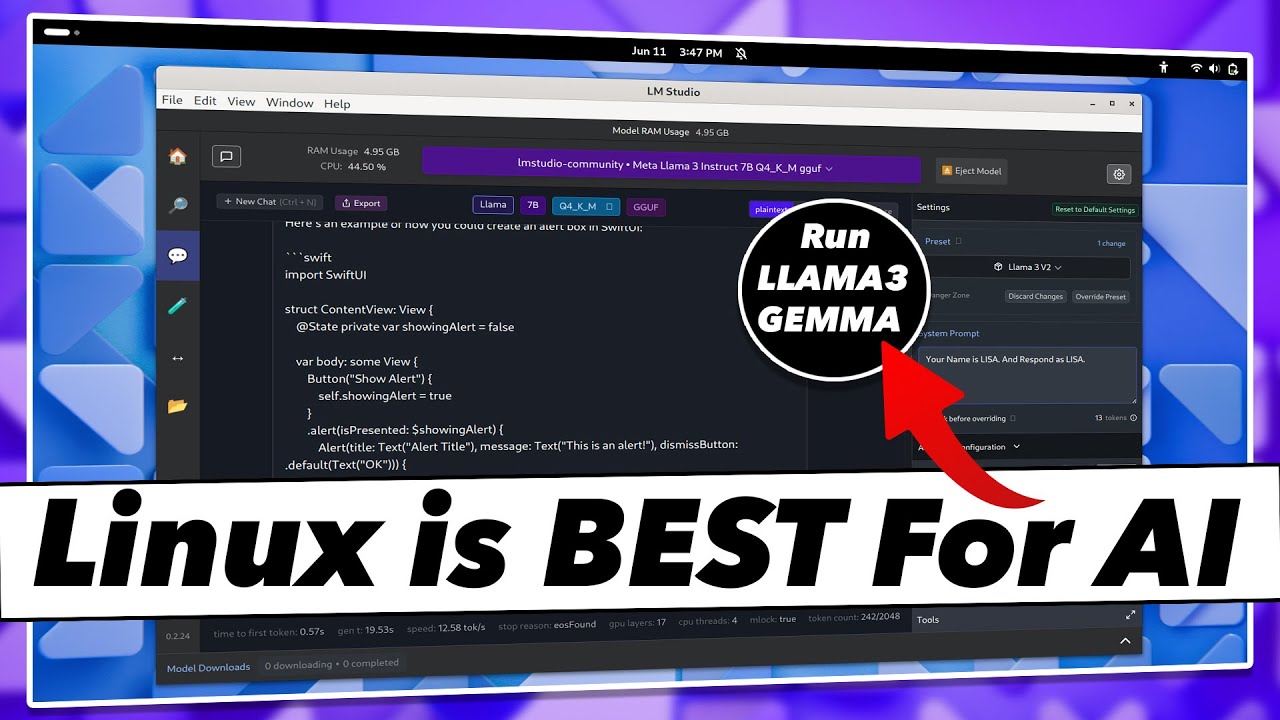

I’m still trying out combinations of hardware and models, but even my old Intel 8500T CPU will generate text at around reading speed with a stock version of Meta’s Llama 3.2 3B (maybe the one you tried), with mostly good output. That is fine for rewriting content, answering questions about uploaded document stores etc.
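
If you want to put a number on “reading speed” for your own hardware, here is a minimal sketch. It assumes the model is served by Ollama on its default local port and that the response to a non-streaming `/api/generate` call includes the `eval_count` and `eval_duration` (nanoseconds) fields. The model tag `llama3.2:3b` and the helper names are just placeholders for illustration, not anything from my actual setup.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def tokens_per_second(eval_count: int, eval_duration_ns: int) -> float:
    """Convert a generated-token count and a nanosecond duration into tokens/s."""
    if eval_duration_ns <= 0:
        raise ValueError("eval_duration_ns must be positive")
    return eval_count / (eval_duration_ns / 1e9)


def measure(model: str, prompt: str) -> float:
    """Send one non-streaming request to the local server and return tokens/s."""
    payload = json.dumps(
        {"model": model, "prompt": prompt, "stream": False}
    ).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL,
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        stats = json.load(response)
    # eval_count = tokens generated, eval_duration = generation time in ns.
    return tokens_per_second(stats["eval_count"], stats["eval_duration"])
```

Calling `measure("llama3.2:3b", "Rewrite this sentence more formally: hi there.")` with the server running gives a tokens-per-second figure you can compare across models and machines. The request only goes to `localhost`, so it also works with the network cable unplugged.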

There are thousands of models tuned for various purposes, so one of the key questions is what you want to use it for. If you want your setup for something specific (e.g., coding SQL), you can probably find a much more efficient model tuned for that task.