*in the US

I want to give it a go, but the previous dev beta wrecked my battery life, even after swapping to the public beta. From the notes, though, it doesn’t seem to include much of the exciting stuff.

I just can’t wait for Siri to have a semblance of intelligence.

Public beta and dev beta are often an identical build. Devs just get it first because they know how to dig out of a hole if the build is horribly wrong.

I wonder if they’re gonna offload Siri requests to the private compute infrastructure to bring some of the Apple Intelligence features to “older devices” (I’m looking at my memory-constrained iPhone 14 Pro Max, for example).

Agree with the other poster that they won’t. Apple will almost surely constrain AI to their latest flagship’s hardware in some way. They already said AI itself is limited to the 15 Pro.

They won’t 😊✌🏻

Putting aside the awful battery life, how do you feel about the new customization features? Specifically, do you like the new Control Center?

I’m running it now. If y’all have any questions about the build, what’s in / what’s out, etc, just ask!

Would you mind reporting back (in a few days) on how the battery life is? I’m kinda concerned about it.

So far it still feels pretty beta-y. AKA, not great.

But that might also change over the coming days. I don’t know if the local model is doing any background indexing.

Oh, I guess it’ll take them some time to get it polished. Btw, did you have to download the models, or were they included in the update?

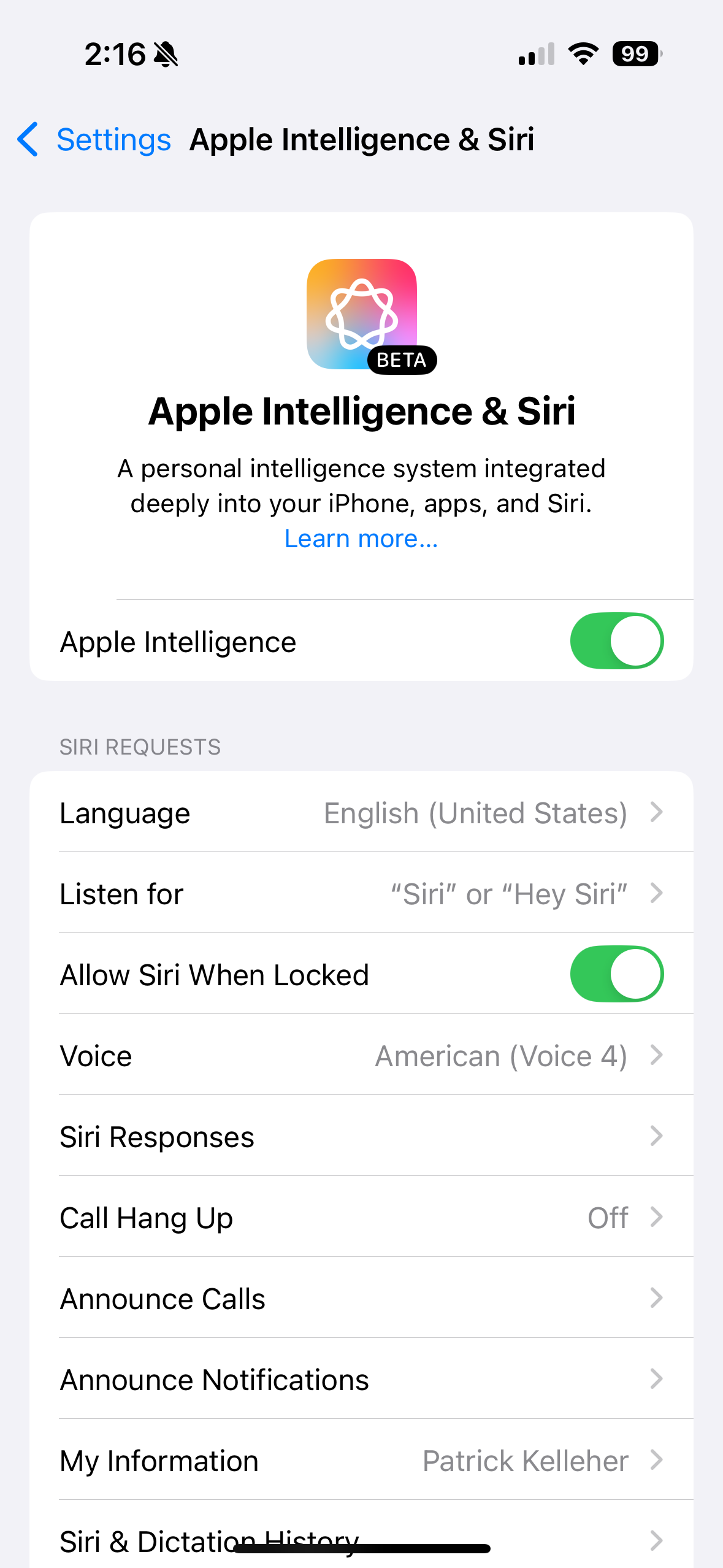

There is no separate install for Apple’s on-device models. You download the OS, go into settings, and opt into the Apple Intelligence waitlist. When you’re approved, it just works.

That said, ChatGPT and the Image Playground are not part of 18.1.

18.1 Beta? 18 isn’t even out yet

That is correct, but AI features have been pushed back to 18.1.

How do I opt out of this bullshit on my OS?

Right now it’s all opt-in.

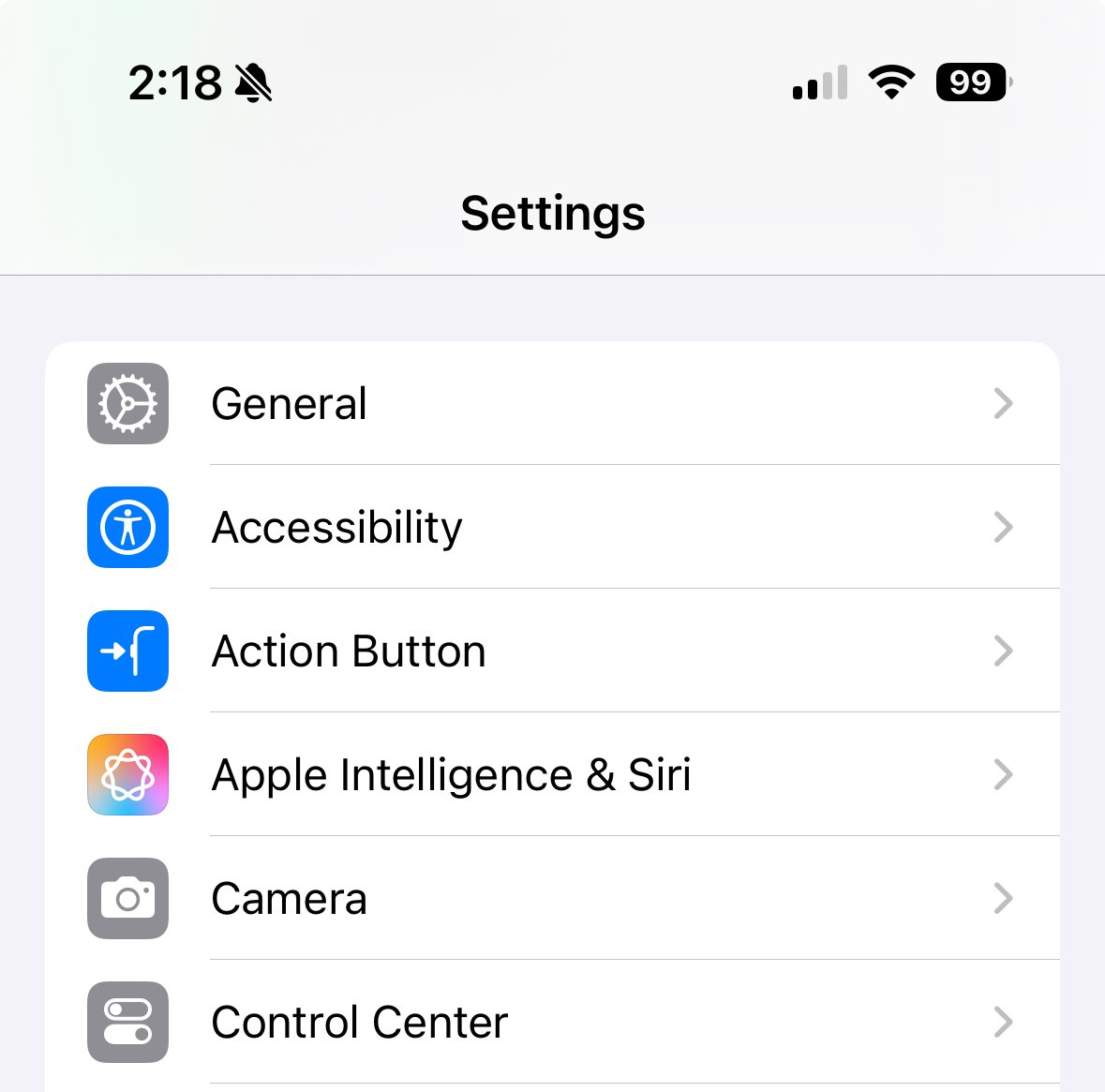

That said, when it is enabled, you can disable it via: Settings > Apple Intelligence > off.

Apple’s implementation is much more privacy-focused than others, so at least that’s not a concern here compared to its Android / Windows counterparts. However, if you really don’t want anything to do with it, then don’t upgrade to the new iOS/macOS; that’s probably your best bet if you want to stay behind.

Having said that, like it or not, this is the direction society is headed. Opting to be left behind is probably going to be detrimental in the long run. Your next device (as long as you remain anything remotely mainstream) will have the newer OS installed and these features enabled by default. So you may as well start embracing it now in the private Apple ecosystem, instead of throwing it all away on some competitor’s ecosystem.

Yeah. I work in the ransomware response sector of IT Security. Frankly, I neither need nor want this bloat on my devices. Hopefully I can find ways to remove it.

Ya, you have IT social skills I see.

Where you work and what you do hardly matters in this case: unless you choose to send your request to ChatGPT (or whatever future model gets included the same way), everything happens on device or in the temporary private compute instance that’s discarded after your request is done. The on-device piece only takes Neural Engine resources when you invoke and use it, so the only “bloat”, so to speak, is disk space. It also wouldn’t surprise me if the models are only pulled from the cloud to your device when you enable them, just like Siri voices in different languages.

Don’t worry, I’m sure they have a setting for those scared of the future.